UPDATE

- 2024.04.01: DARTset can now be cloned from Hugging Face Datasets at DARTset.

- 2022.09.16: DART was accepted to NeurIPS 2022 - Datasets and Benchmarks Track!

- 2022.09.29: DART's GUI source code is publicly available at Unity GUI source code!

Abstract

Video

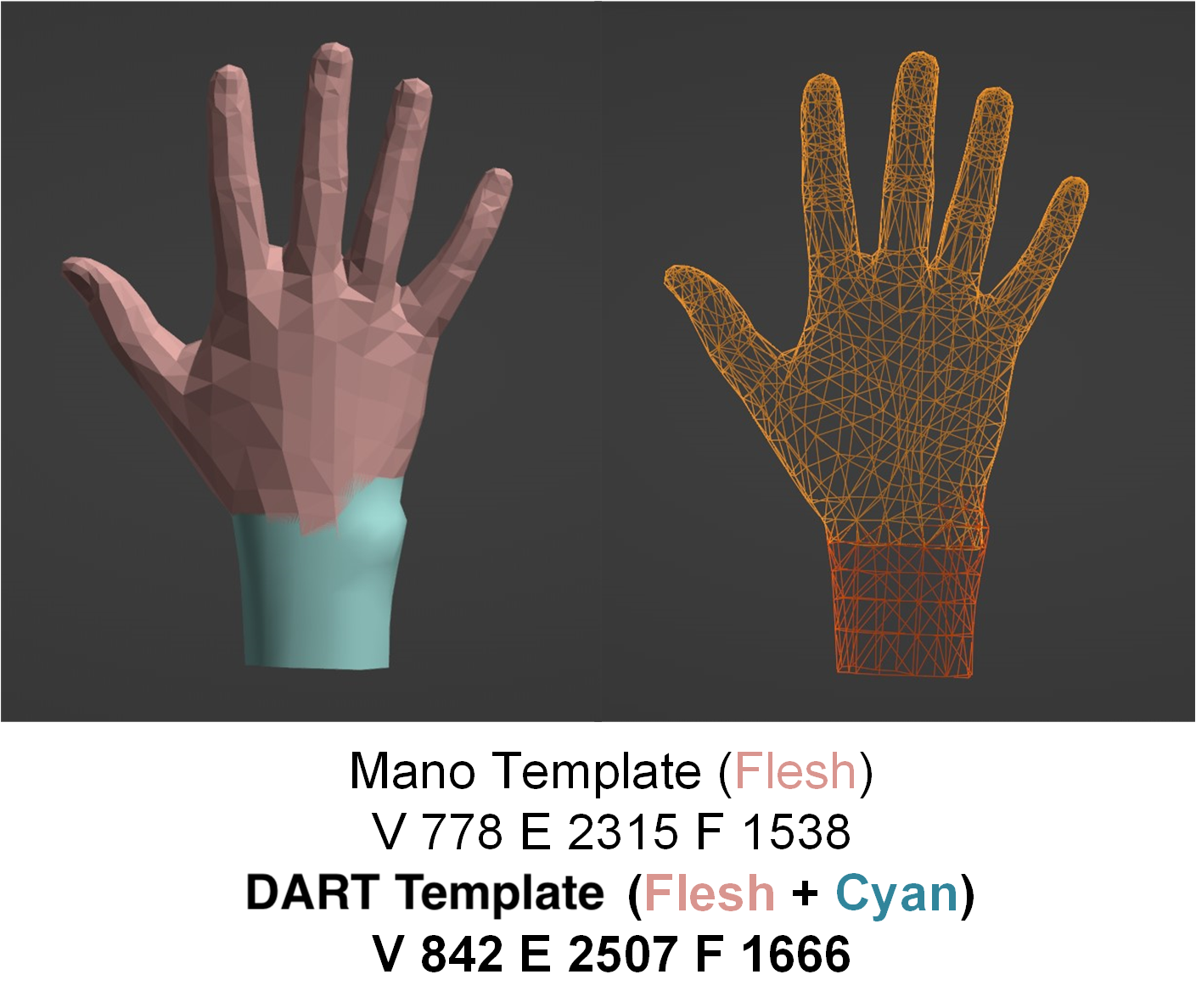

Comparison between DART and MANO basic topology.

How to use the DART tool to generate your own data?

Step 1: Click DART GUI & Code, download Build_Hand.zip, unzip it, and execute Hand.exe.

Step 2: Pose Editing: freely edit the pose, illumination, accessory, skin color, and other settings.

Step 3: Exporting: export the rendered image with its ground truth (the MANO pose and the 2D/3D joints are written to output.pkl).
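A minimal sketch for inspecting the exported file in Python, assuming output.pkl is a standard pickle that holds a dictionary. The helper names `load_annotations` and `summarize` are illustrative and not part of the DART tool, and the key names in the file depend on the export. If the file stores NumPy arrays, NumPy must be installed for unpickling to succeed.

```python
import pickle
from pathlib import Path


def load_annotations(pkl_path):
    """Load the exported ground truth. Only unpickle files you trust."""
    with Path(pkl_path).open("rb") as f:
        return pickle.load(f)


def summarize(annotations):
    """Map each key to a short description of its value (shape, length, or type)."""
    summary = {}
    for key, value in annotations.items():
        if hasattr(value, "shape"):  # e.g. NumPy arrays
            summary[key] = f"{type(value).__name__}, shape={tuple(value.shape)}"
        elif hasattr(value, "__len__"):
            summary[key] = f"{type(value).__name__}, len={len(value)}"
        else:
            summary[key] = type(value).__name__
    return summary


if __name__ == "__main__":
    path = Path("output.pkl")  # file written by the export step
    if path.exists():
        for key, description in summarize(load_annotations(path)).items():
            print(f"{key}: {description}")
```

Printing the summary first is a cheap way to learn which keys hold the MANO pose and the 2D/3D joints before writing any loader that depends on them.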

DARTset Datasheet & Explanation

Dataset Structure

- Rendered Image: with background (384x384), without background RGBA (512x512).

- Annotation: 2D/3D positions for 21 keypoints of the hand, MANO pose, vertex locations.

- Visualization & Code: https://github.com/DART2022/DARTset

- DARTset Download: HuggingFace Page (Train & Test)

DARTset is composed of a train set and a test set. The folder of each is described below.

Train set

* Train: 729,189 single hand frames, 25% of which are wearing accessories.

Test set

* Test (H): 28,877 single hand frames, 25% of which are wearing accessories.

Total set

* DARTset: 758,066 single hand frames. Note that we conducted our experiments on the full 800K DARTset; for the final version, we filtered out ~42,000 images in which the wrist was left unsealed.
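The split sizes can be sanity-checked in a few lines of Python. This sketch assumes the test split contains 28,877 frames, the value that makes the train and test counts sum to the stated total of 758,066; the constant names are illustrative.

```python
TRAIN_FRAMES = 729_189
TEST_FRAMES = 28_877  # assumed reading of the test count
TOTAL_FRAMES = 758_066

# Train and test together should account for every frame in the final set.
assert TRAIN_FRAMES + TEST_FRAMES == TOTAL_FRAMES

train_share = TRAIN_FRAMES / TOTAL_FRAMES
test_share = TEST_FRAMES / TOTAL_FRAMES
print(f"train: {train_share:.1%}, test: {test_share:.1%}")
# prints: train: 96.2%, test: 3.8%
```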

Pose sampling

We use spherical linear interpolation (Slerp) for pose and root rotation sampling. Among these hands, ~25% are assigned an accessory. In DARTset, the basic UV maps (skin tones, scars, moles, tattoos) and the accessories are all uniformly sampled, so the numbers of their corresponding renders are roughly equal.
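The appearance sampling described above can be illustrated with a short simulation. This is a sketch under assumptions, not the DART generator: the texture and accessory names are placeholders, and the only values taken from the text are the uniform choice and the ~25% accessory rate.

```python
import random
from collections import Counter

TEXTURES = [f"texture_{i:02d}" for i in range(10)]  # hypothetical UV map names
ACCESSORIES = ["accessory_a", "accessory_b", "accessory_c"]  # hypothetical names
ACCESSORY_RATE = 0.25  # ~25% of hands are assigned an accessory


def sample_appearance(rng):
    """Pick a texture uniformly; attach a uniformly chosen accessory ~25% of the time."""
    texture = rng.choice(TEXTURES)
    accessory = rng.choice(ACCESSORIES) if rng.random() < ACCESSORY_RATE else None
    return texture, accessory


rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [sample_appearance(rng) for _ in range(100_000)]

texture_counts = Counter(texture for texture, _ in samples)
accessory_share = sum(acc is not None for _, acc in samples) / len(samples)

print(f"accessory share: {accessory_share:.3f}")
print(f"renders per texture: min={min(texture_counts.values())}, "
      f"max={max(texture_counts.values())}")
```

With uniform choices, the per-texture counts differ only by sampling noise, which is what "roughly equal" means here.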

Dataset Creation

Texture maps (4096 x 4096) are all created manually by 3D artists. The GUI and the batch data generator are programmed by DART's authors.

- (a) Pose Sampling: In DART, we sampled 800K poses in total, of which 758K are valid. For each pose, we take a synthetic pose θs from A-MANO and randomly pick 2,000 poses θr from FreiHAND. By computing the difference between θs and each θr, we select the pose θr_max that differs most from θs. We then interpolate 8 rotations from θr_max to θs using spherical linear interpolation (Slerp). For more details, please see the paper.

- (b) Mesh Reconstruction: After part (a), we have N x (16,3) MANO poses (N=758K). We feed these poses directly into our revised MANO layer, which yields the DART mesh (the MANO mesh plus an extra wrist) with 842 vertices and 1,666 faces.

- (c) Rendering: We use Unity and our revised SSS skin shader for rendering. In the rendering pipeline, we randomly select the illumination (intensity, color, and position) and the texture (including accessories). After rendering, we have the final image and the 2D reprojected landmarks.

- (d) Ground Truth Data: In parts (a) and (b), we already gather all 3D ground truth for monocular 3D pose estimation and hand tracking, but not yet the 2D landmarks. After the rendering process (part (c)), we obtain the 2D landmarks and add them to the ground truth data file. Because DARTset is built on top of the ground truth annotations, every rendering comes with its annotations by construction.
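Step (a) can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' implementation: poses are stored as 16 axis-angle vectors, the difference between two poses is measured as the sum of per-joint geodesic angles, the 8 interpolated rotations are taken at evenly spaced parameters that include both endpoints, and random vectors stand in for the A-MANO and FreiHAND poses. All function names are hypothetical.

```python
import math
import random


def axis_angle_to_quat(v):
    """Convert an axis-angle vector (3 floats) to a unit quaternion (w, x, y, z)."""
    angle = math.sqrt(sum(c * c for c in v))
    if angle < 1e-8:
        return (1.0, 0.0, 0.0, 0.0)
    s = math.sin(angle / 2.0) / angle
    return (math.cos(angle / 2.0), v[0] * s, v[1] * s, v[2] * s)


def quat_to_axis_angle(q):
    """Convert a unit quaternion back to an axis-angle vector."""
    w, x, y, z = q
    if w < 0.0:  # q and -q encode the same rotation; keep the shorter one
        w, x, y, z = -w, -x, -y, -z
    n = math.sqrt(x * x + y * y + z * z)
    if n < 1e-8:
        return (0.0, 0.0, 0.0)
    angle = 2.0 * math.atan2(n, w)
    return (x / n * angle, y / n * angle, z / n * angle)


def slerp(q0, q1, t):
    """Spherical linear interpolation between two unit quaternions."""
    dot = sum(a * b for a, b in zip(q0, q1))
    if dot < 0.0:  # take the shorter arc
        q1, dot = tuple(-c for c in q1), -dot
    if dot > 0.9995:  # nearly identical rotations: normalized linear blend
        q = [a + t * (b - a) for a, b in zip(q0, q1)]
    else:
        theta = math.acos(dot)
        s0 = math.sin((1.0 - t) * theta) / math.sin(theta)
        s1 = math.sin(t * theta) / math.sin(theta)
        q = [s0 * a + s1 * b for a, b in zip(q0, q1)]
    norm = math.sqrt(sum(c * c for c in q))
    return tuple(c / norm for c in q)


def pose_distance(pose_a, pose_b):
    """Sum of per-joint geodesic angles (an assumed difference measure)."""
    total = 0.0
    for ja, jb in zip(pose_a, pose_b):
        qa, qb = axis_angle_to_quat(ja), axis_angle_to_quat(jb)
        dot = abs(sum(a * b for a, b in zip(qa, qb)))
        total += 2.0 * math.acos(min(1.0, dot))
    return total


def interpolate_poses(theta_r_max, theta_s, steps=8):
    """Slerp every joint from theta_r_max to theta_s at evenly spaced t in [0, 1]."""
    sequence = []
    for i in range(steps):
        t = i / (steps - 1)
        sequence.append([
            quat_to_axis_angle(slerp(axis_angle_to_quat(a), axis_angle_to_quat(b), t))
            for a, b in zip(theta_r_max, theta_s)
        ])
    return sequence


rng = random.Random(0)


def random_pose():
    """Stand-in for a real pose: 16 joints, each a small random axis-angle vector."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(3)] for _ in range(16)]


theta_s = random_pose()                           # stand-in for the synthetic pose
candidates = [random_pose() for _ in range(2000)]  # stand-ins for the real poses
theta_r_max = max(candidates, key=lambda p: pose_distance(theta_s, p))
sequence = interpolate_poses(theta_r_max, theta_s)
print(len(sequence), "poses, each with", len(sequence[0]), "joints")
```

Interpolating on unit quaternions keeps every intermediate joint rotation valid, which a linear blend of axis-angle vectors does not guarantee.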

Considerations for Using the Data

- Social Impact: No ethical issues, because DART does not involve any human biological information.

- Gender Biases: Our basic mesh topology is FIXED (we focus more on pose than on shape), so the mesh itself is gender-agnostic. In our data generation pipeline, both texture maps and accessories in DARTset are randomly sampled. Given this, we do not expect DARTset to involve extreme gender bias.

Additional Information

- Dataset Curator: Daiheng Gao, daiheng.gdh@alibaba-inc.com

- Licensing Information: Code is under the MIT license. GUI (Unity) tools are under the CC BY-NC 4.0 license. The dataset is under the CC BY-NC-ND 4.0 license.

Acknowledgements

@inproceedings{gao2022dart,

title={{DART: Articulated Hand Model with Diverse Accessories and Rich Textures}},

author={Daiheng Gao and Yuliang Xiu and Kailin Li and Lixin Yang and Feng Wang and Peng Zhang and Bang Zhang and Cewu Lu and Ping Tan},

booktitle={Thirty-sixth Conference on Neural Information Processing Systems Datasets and Benchmarks Track},

year={2022},

}

The website template was borrowed from Michaël Gharbi.